Co-written with Regis Amichia, Data Science Lead, Foxintelligence


Over the last few years, many organizations have invested substantially in data and analytics. The objective is to become more data-driven and to operate like a tech company. Companies willing to go further than a symbolic repositioning invest in AI to move from descriptive analytics to predictive and prescriptive analytics. This requires a solid data and AI governance program, IT infrastructure that makes all data readily available in a so-called data lake, and steering of the organization through a carefully selected portfolio of key performance indicators. It has been widely documented, however, that the most important hurdle is the change to a culture that embraces agility and experimentation. In fact, it is the people who need reskilling. As a consequence, training programs have been launched, and large organizations can now boast about hundreds of use cases created by interdisciplinary teams and shared in an internal repository for further development and innovation. The hard question comes next: what is the return on these huge investments? Why are so few AI use cases in production, and where is the tangible value? There seems to be a gap that needs to be filled, and MLOps provides part of the answer.

Before going into MLOps, let us take one step back. Finding the best methodology for project management has always been a brain teaser for the software development community. It started with the waterfall approach, introduced in the 70s by Winston Royce. This linear approach defines several steps in the software development lifecycle: requirements, analysis, design, coding, testing, and delivery. Each stage must be finished before the next one starts, and the clients only see the results at the end of the project. This methodology creates a “tunnel of development” between gathering the client requirements and the delivery of the project. For many years, this linear approach caused a tremendous waste of resources. An error in the design stage, or clients changing their minds, meant restarting the development process. Furthermore, engineering teams were split by stage (developers for coding, QA teams for testing, and sysadmins for delivery), which created friction and fertile ground for communication errors. This is one of the reasons that led to a new methodology around 2001: the agile approach.

Agile principles have infused the software engineering culture for more than 20 years. They have endowed companies with the ability to adapt to new information rather than follow an immutable plan. In a fast-changing business environment, this is more a question of survival than a simple change of methodology. Companies now put customer involvement and iteration at the heart of the software development process. They bring together engineers with complementary skills in teams coordinated by product managers to regularly release pieces of software, gather feedback, and adapt the roadmap accordingly. This was a true revolution, but it was not perfect: there was still a gap between software development and what happens after the software is released, also known as operations. In 2008, Patrick Debois and Andrew Clay Shafer filled this gap with the DevOps (a contraction of development and operations) methodology. By bringing all teams (software developers, QA, and sysadmins) together in both the development and the operations processes, waiting times are reduced and everyone can work more closely to develop better solutions.

Back to today: what can DevOps bring in the era of artificial intelligence? The needs are the same: companies are looking for a methodology to develop and scale AI algorithms so as to generate value and reap the benefits of their investments. Data leaders have recently begun to investigate the benefits of the DevOps methodology. However, machine learning and AI algorithms have a peculiarity that drastically differentiates them from traditional software: the data.

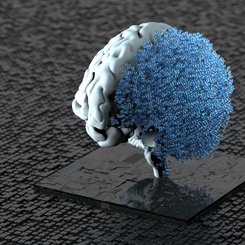

Data is everywhere and has become a tremendous source of value for companies. Recent advances in fundamental research and the democratization of machine learning through open-source solutions have made artificial intelligence accessible to all. Data scientists are among the most sought-after profiles in the current job market, as they promise to be the key factor in unlocking the value of data. But in the same way that software developers needed the DevOps methodology to maximize their productivity and scale software development in controlled and secured environments, data scientists need a framework to develop and scale AI-powered solutions. Since those solutions are different from traditional software, they need to be managed accordingly. It is therefore essential to use DevOps practices, but data leaders also need to acknowledge the singularity of using data within software that makes decisions autonomously. This is where Machine Learning Operationalization (MLOps) comes to the rescue.

MLOps is a set of practices that brings DevOps, machine learning, and data engineering together to deploy and maintain ML systems in production. This is the missing piece that allows organizations to release the value contained in data using artificial intelligence. By formalizing and standardizing processes, MLOps fosters experimentation while also enabling rapid delivery, so that machine learning solutions can scale beyond their use case status. Once the solutions are in production and consume new data, monitoring predictive performance is key. No ML solution universally outperforms all others on every problem, and a model that performs well today may degrade as the data changes, hence organizations need to monitor predictive performance in real time. MLOps helps monitor this performance and triggers action when deterioration due to concept drift occurs. Automating the collection of lifecycle information about algorithms, that is, tracking what has been recalibrated, by whom, and why, improves the learning process and supports reporting to auditors if required. Hence, accountability and compliance issues can be addressed.
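
To make the monitoring and lifecycle-tracking ideas above more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions rather than a reference implementation: the `PerformanceMonitor` class, its parameters (`baseline_accuracy`, `tolerance`, `window_size`), and the audit entry fields are hypothetical names chosen for this example. It also uses a drop in rolling accuracy as a simple proxy for concept drift, which assumes that true outcomes eventually become available for comparison.

```python
from collections import deque
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class PerformanceMonitor:
    """Hypothetical helper: rolling accuracy check plus a simple audit trail."""

    baseline_accuracy: float   # accuracy measured when the model was deployed
    tolerance: float = 0.05    # assumed acceptable drop before flagging drift
    window_size: int = 100     # number of most recent predictions to evaluate
    outcomes: deque = field(init=False, default_factory=deque)
    audit_log: list = field(init=False, default_factory=list)

    def __post_init__(self) -> None:
        # A bounded deque discards the oldest outcome once the window is full.
        self.outcomes = deque(maxlen=self.window_size)

    def record(self, predicted, actual) -> None:
        """Store whether a prediction matched the outcome observed later."""
        self.outcomes.append(predicted == actual)

    def rolling_accuracy(self) -> Optional[float]:
        """Share of correct predictions in the window, or None if it is empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drift_suspected(self) -> bool:
        """Flag possible drift only once a full window of outcomes is available."""
        if len(self.outcomes) < self.window_size:
            return False
        return self.rolling_accuracy() < self.baseline_accuracy - self.tolerance

    def log_recalibration(self, actor: str, reason: str) -> None:
        """Record what was recalibrated, by whom, and why, then reset the window."""
        self.audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": "recalibration",
            "reason": reason,
            "rolling_accuracy_before": self.rolling_accuracy(),
        })
        self.outcomes.clear()
```

In use, a serving pipeline would call `record` each time a true outcome arrives, check `drift_suspected` on a schedule, and call `log_recalibration` whenever the model is retrained, so that the audit log can later be handed to reviewers. A production setup would typically add statistical drift tests on the input data and persistent storage for the log, both of which are left out of this sketch.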

While most data training programs focus on the elements of machine learning, statistics and coding, and work on use cases in a sandbox environment, MLOps principles are not yet covered extensively. Furthermore, business leaders invest in AI without fully understanding how to create an efficient development and operations environment for their data teams. Filling the gap between data and operations is not straightforward. The complexity of ML algorithms, often considered as a black box run by data scientists who are supposedly the only ones in the company to understand what they are doing, separates others from the development process and creates another gap between AI and business.

MLOps does not only concern engineers: every stakeholder of data-based solutions should be involved. The revolution of artificial intelligence is undoubtedly happening now, and all those who intend to be part of it will have a role in creating and running MLOps processes in their organization. Future data leaders should acquire basic MLOps skills in their training programs to remove the harmful and unnecessary boundary between business leaders and engineering teams around data-related topics.