The influence of social networks on democratic debate stems from the choices made by their algorithms rather than from the messages posted by specific individuals, however misleading these may be. Regulating algorithmic recommendations is a feasible way to limit their political influence.

The Brexit referendum and the US elections have shown the political influence that social media recommendation algorithms command. Today, the CEOs of Twitter and Facebook disagree about how best to react to Donald Trump's misleading interventions. The reasons lie in the economic model of each company, their influence and the specific dynamics of social networks. But are the solutions they advocate sufficient?

The success of recommendations

Twitter and Facebook are both financed by advertising and their interest is therefore to maximize the depth of their network, the audience of the interventions and the amplitude of the reactions in order to collect ever more information on the tastes, interests and preferences of their users. This allows them to suggest the most relevant ads to us or, like Netflix which modifies the covers of films and series based on our reactions, to present us with ads in the form that will have the most influence on our behavior.

In his 2006 book "The Long Tail", Chris Anderson, then editor-in-chief of Wired magazine, already pointed out the major difference between selling in stores and selling online: online, the catalog immediately available for purchase is not constrained by physical storage and can therefore generate significant income through a wide variety of recommendations. This, of course, is the reason for the success of Amazon and Netflix.

In principle, recommendation algorithms draw on our own past behavior. They place us in a group of individuals with similar tastes, following the rule "if you liked this, you may like that". The goal of such an algorithm is not to deceive us but, in the short term, to generate the most clicks, reactions and shares. It is generally supplemented by so-called "A/B testing", in which two alternatives with similar content (A and B) are shown to us one after the other to determine which makes us react more. Recommendations thus become increasingly specialized. This polarization leads us to receive only what reinforces our existing tastes and opinions.
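The "if you liked this" rule can be illustrated with a minimal sketch of similarity-based recommendation. The users, films and scoring scheme below are invented for illustration and do not describe how any particular platform works.

```python
from math import sqrt

# Hypothetical interaction data: 1 means the user liked the item.
likes = {
    "ana":   {"film_a": 1, "film_b": 1, "film_c": 1},
    "ben":   {"film_a": 1, "film_b": 1, "film_d": 1},
    "chloe": {"film_e": 1, "film_f": 1},
}

def cosine(u, v):
    """Cosine similarity between two sparse like-vectors."""
    shared = set(u) & set(v)
    dot = sum(u[i] * v[i] for i in shared)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def recommend(user, likes, top_n=3):
    """Score unseen items by the similarity of the users who liked them."""
    scores = {}
    for other, items in likes.items():
        if other == user:
            continue
        sim = cosine(likes[user], items)
        for item in items:
            if item not in likes[user]:
                scores[item] = scores.get(item, 0.0) + sim
    # Highest score first; ties broken alphabetically; zero scores dropped.
    ranked = sorted(scores, key=lambda i: (-scores[i], i))
    return [i for i in ranked if scores[i] > 0][:top_n]

print(recommend("ana", likes))
```

In this toy dataset, the films liked only by "chloe" never reach "ana", because the two users share no past likes: the narrowing effect described above appears even in a very small example.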

Recommendation or influence?

These companies influence not only our buying behavior but also our political opinions, because their recommendations are not neutral. In France, a newsagent has an obligation to offer all the magazines published nationwide but, for lack of space, she will choose to favor some and relegate others. Each reader typically buys from a single store, so she is subject to the editorial choice of the merchant. Yet the large number and heterogeneity of points of sale mean that, at the national level, the population as a whole maintains a certain autonomy in its choices. The presence of regional distribution networks with a specific promotion policy for certain titles could, however, prevent the dissemination of certain opinions by creating local quasi-monopolies. Online, the broadcast media sector is concentrated around a few operators, a result of the so-called law of increasing returns from individual data. The consequence is that a broadcaster, whether Netflix, OCS or another, has a significant political role through its recommendations: in the current context of awareness around "Black Lives Matter", the broadcaster can for example choose, or not, to highlight Gone with the Wind or documentaries such as Ouvrir la Voix, in which director Amandine Gay films black women discussing the daily discrimination they suffer.

In the United States, the polarization of the media and the emergence of highly partisan television channels (Fox News, MSNBC…) were made possible when the Federal Communications Commission, headed by a close adviser to Ronald Reagan, abolished the "fairness doctrine" in August 1987. Established in 1949, this principle required the media to cover important current affairs with contrasting arguments, although this sometimes took the form of merely presenting "pros" and "cons" without depth. To avoid presenting an editorial policy that would make them responsible for content, Facebook and Twitter have so far decentralized the choice and prioritization of information sources without controlling the message, as allowed by a 1996 law. They present themselves as simple apolitical "platforms" and not as broadcasters responsible for editorial content. Donald Trump and Twitter are testing the limits of this interpretation today. Twitter can afford it because its model is that of the megaphone: its economic profitability is based on the ability of everyone to speak to the greatest number. The value of Facebook, by contrast, rests on the strength of the links between its subscribers, on their proximity: the profitability of its model depends on the quality of ad placement rather than just the number of ads. Indirectly, and much like Netflix, Facebook relies on its knowledge of its "catalog" of users and on the relevance of its recommendations.

A safeguard of algorithms

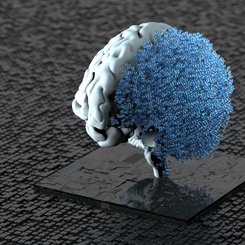

The question for law enforcement and democratic debate is therefore not so much the individual content of messages posted online (as long as they remain within the limits of the law) as the choice between controlling algorithmic recommendations and a liberal laissez-faire. The key issue is the concentration of social networks in the hands of a few operators and, therefore, the major influence their algorithms possess. Moreover, these algorithms can generate information bubbles in which new recommendations only reinforce our preconceptions, making them potential sources of rumors and populism. The central question therefore concerns access to information.

Fortunately, this question of the "robustness" of democratic debate could be addressed directly through the way recommendations are formulated. The problem can be seen as one of over-optimization: algorithms target our past behaviors, and their suggestions leave too little room for serendipity, the possibility of discovering new and unexpected interests. The recent success of artificial intelligence techniques stems precisely from the joint development of mathematical and computational optimization tools (which allow us to tackle ever more complex problems) and of "machine" or statistical learning, whose principle is to introduce into the algorithms a penalty for over-optimization. The justification for this penalty is that a model over-optimized at a given time (or on a given dataset) does not hold up over time: less accurate but more reliable recommendations are better than apparently more precise short-term predictions. Overfitted models may look accurate at first but can cause significant problems later on, like a gear that, too tightly adjusted and without any backlash, would jam at the slightest vibration.
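The idea of a penalty for over-optimization can be sketched with the simplest possible case, a one-parameter linear model fitted with and without a penalty on the size of its coefficient (ridge regression). The data points and the penalty value are made up for illustration, and the function names are hypothetical.

```python
def fit_slope(xs, ys, penalty=0.0):
    """Least-squares slope of y = w * x, with an optional penalty on w**2.

    Minimizes sum((y - w * x)**2) + penalty * w**2, whose closed-form
    solution is w = sum(x * y) / (sum(x * x) + penalty).
    """
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + penalty)

def mean_squared_error(w, xs, ys):
    """Average squared gap between predictions w * x and observations y."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Made-up data: the underlying relation is y = x, but the two observations
# available "today" are noisy.
past_x, past_y = [1.0, 2.0], [1.5, 2.9]
future_x, future_y = [3.0, 4.0], [3.0, 4.0]

w_plain = fit_slope(past_x, past_y)                   # fitted tightly to the past
w_penalized = fit_slope(past_x, past_y, penalty=2.0)  # deliberately less tight

for name, w in [("plain", w_plain), ("penalized", w_penalized)]:
    print(name,
          "past error:", round(mean_squared_error(w, past_x, past_y), 3),
          "future error:", round(mean_squared_error(w, future_x, future_y), 3))
```

On this contrived dataset the penalized model fits the past observations less closely but predicts the later ones better, which is the trade-off between precision and reliability described above. The effect depends on the data and on the chosen penalty; it is not guaranteed in general.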

Further, if the heart of the problem lies in controlling recommendations, the public regulator can act directly on the specification of the algorithms to reduce the risks, as was done in another context after the financial crisis of 2008 through the international agreements of Basel III and Solvency II. These agreements took note of the risks of financial crises triggered by a few grains of sand in the wheels of over-optimized financial derivatives: we remember the notorious CDS, the "credit-default swaps" which until 2008 mixed (optimally, it was believed) various securities, including the notorious American "subprime" mortgages that were at the origin of the crisis. International agreements now target precise indicators of the risk incurred and impose on banks and insurers thresholds for ratios of assets (minimum compulsory reserves), debt (maximum leverage) and diversification of investments (the minimum Value-at-Risk). This strategy can be transposed to controlling the over-optimization penalty of algorithms that have a political impact, in order to protect the diversity of information and the democratic agora: for instance, by requiring a minimum ratio for the quality of algorithmic predictions, or by imposing a minimum weighting of recommendations that do not directly relate to past behaviors.
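A minimum weighting of this kind could take many forms. The sketch below shows one hypothetical version, in which a fixed share of the slots in a recommendation list is reserved for items that were not selected from the user's past behavior. The function name, its parameters and the 40% figure are illustrative assumptions, not an existing regulation or platform feature.

```python
def blend(personalized, exploratory, slots, min_explore_percent):
    """Fill `slots` positions, reserving a minimum share for exploratory items.

    personalized: items ranked from the user's past behavior, best first.
    exploratory:  items chosen independently of that behavior.
    min_explore_percent: integer from 0 to 100, the hypothetical regulatory floor.
    """
    if not 0 <= min_explore_percent <= 100:
        raise ValueError("min_explore_percent must be between 0 and 100")
    # Round the reserved share up so the floor is never undershot.
    reserved = (slots * min_explore_percent + 99) // 100
    explore = exploratory[:min(reserved, len(exploratory))]
    # Personalized items fill the remaining slots, skipping duplicates.
    personal = [item for item in personalized if item not in explore]
    return personal[:slots - len(explore)] + explore

feed = blend(
    personalized=["p1", "p2", "p3", "p4", "p5"],
    exploratory=["x1", "x2", "x3"],
    slots=5,
    min_explore_percent=40,
)
print(feed)
```

Here two of the five slots go to exploratory items whatever the user's history suggests. How those exploratory items are chosen is left open in this sketch.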

Controlling the backlash in recommendation mechanisms is essential to ensure their plasticity and therefore their robustness. Simple indicators make it possible to measure this backlash, and the authorities could analyze them relatively easily in order to reinforce the distinction between suggestion and influence.
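As an illustration of what such indicators might look like, the sketch below computes two simple measures on a list of recommended topics: the share of recommendations falling outside the topics a user has already engaged with, and the Shannon entropy of the recommended topics. Both are assumptions chosen for illustration; the text does not name specific indicators.

```python
import math
from collections import Counter

def novelty_share(recommended_topics, past_topics):
    """Fraction of recommendations whose topic the user has not engaged with."""
    if not recommended_topics:
        return 0.0
    new = sum(1 for topic in recommended_topics if topic not in past_topics)
    return new / len(recommended_topics)

def topic_entropy(recommended_topics):
    """Shannon entropy (in bits) of the topic mix; 0 means a single topic."""
    counts = Counter(recommended_topics)
    total = sum(counts.values())
    return sum(-(n / total) * math.log2(n / total) for n in counts.values())

# Hypothetical feed for a user who has so far only engaged with sport.
feed_topics = ["sport", "sport", "politics", "science"]
print(novelty_share(feed_topics, past_topics={"sport"}))
print(topic_entropy(feed_topics))
```

A feed made up of a single topic scores zero on both measures, while a more varied feed scores higher. A regulator could in principle track such values over time, although choosing meaningful topic categories and thresholds would be the hard part.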